Is there a Future for Artificial Intelligence in HR?

Facial monitoring in personnel selection has come under a lot of criticism.

The use of artificial intelligence in job application management has suffered setbacks in recent weeks. For example, U.S. market leader Hirevue said on Jan. 12 that it would remove visual analysis from its assessment models and focus on natural language processing.

A German TV station showed that the AI algorithm of Munich-based startup Retorio can be heavily influenced by superficial changes such as wearing glasses or changing the background.

A year ago, the NGO EPIC filed a complaint with the Federal Trade Commission against the discriminatory and biased use of AI.

The common thread is that AI replicates the mistakes and biases of humans. That is no wonder, since it has been trained on human judgments. In short, AI makes the same wrong decisions, only much more efficiently. Does this mean that the topic of “automated applicant assessment” is at an end?

Hardly. Psychologists have been studying assessment errors for decades. Dozens of assessment systems, particularly in the U.S., have had to prove that they do not favor or discriminate against anyone based on race, gender, age or sexual orientation. In fact, the less “human judgment” is involved, the more likely a decision is to be fair. Work samples and scientific testing procedures are more objective than interviews, and procedures involving multiple, heterogeneous observers are more neutral than those relying on a single judgment.

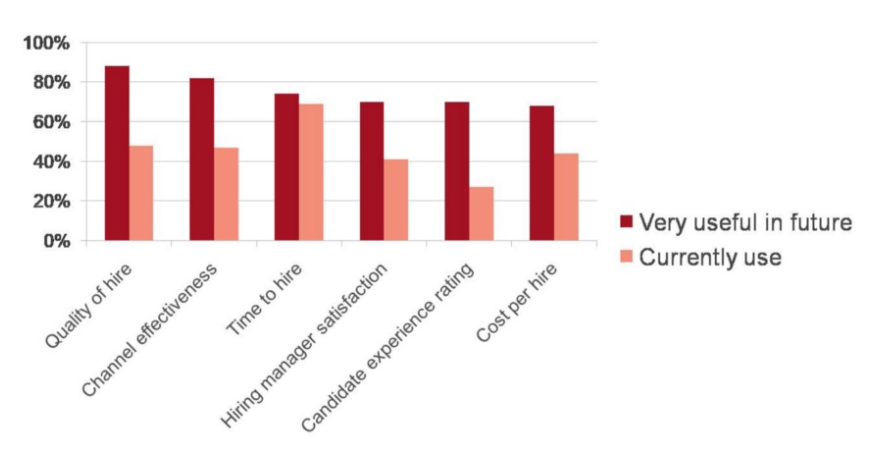

Nevertheless, the prognostic quality, i.e., the prediction of future professional success, remains thin. Hardly any procedure can predict more than 25% of success, so three quarters depend on factors not included in the assessment procedure.
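One way to read the 25% figure, assuming it refers to explained variance (the squared correlation between assessment score and later performance, which the text does not state explicitly), is that it corresponds to a correlation of about 0.5. A minimal Python sketch of that arithmetic, with hypothetical function names:

```python
import math


def validity_from_explained_variance(r_squared: float) -> float:
    """Correlation implied by a share of explained variance.

    Assumption: "predicting 25% of success" means r^2 = 0.25.
    """
    if not 0.0 <= r_squared <= 1.0:
        raise ValueError("explained variance must lie between 0 and 1")
    return math.sqrt(r_squared)


def unexplained_share(r_squared: float) -> float:
    """Share of the outcome that the assessment leaves unexplained."""
    return 1.0 - r_squared


explained = 0.25  # the figure quoted in the text
print(validity_from_explained_variance(explained))  # 0.5
print(unexplained_share(explained))                 # 0.75
```

Under this reading, even a procedure whose scores correlate at 0.5 with later success leaves three quarters of the outcome unaccounted for.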

Within a few years, trained AI has managed to replicate the flawed human decision-making process. It has a good chance of surpassing it within the next few years, just as computers have beaten the best human chess or Go players. What must a good selection process be able to do?

1. Find the right people

Testing procedures have been digitized for many years. AI can also make the evaluation of biographical elements much more precise and differentiated. As Katie Bishop writes, social media scraping is already widely used by recruiters. Scientific emotion recognition already outperforms the subjective impressions of most trained observers. Natural language processing (NLP) is also well advanced.

What AI systems still lack is ongoing feedback. Once assessment algorithms can include multi-year probationary data to review their decisions, the quality of those decisions will significantly exceed that of their human counterparts, because unlike a human hiring manager, the machine can take in and evaluate a nearly infinite amount of information over and over again if

- such data are allowed to be processed. The storage and linking of extensive employee personal data is subject to various regulations for good reason, and the importance of privacy has increased significantly in recent years.

- the suitability for an abstract task is checked. In many cases today, teamwork is important. Here, success depends largely on how someone fits in with the others on the team and where he or she complements them. If Linda was the perfect fit for Team 1, that doesn’t have to be true for Team 2. Every sports coach knows that a successful team does not come from just adding up individual skills. Technically, this could probably be solved at some point by providing detailed information about the other team members, but that would require a great willingness to accept the transparent employee.

2. Gain the acceptance of applicants

In many industries, it is not jobs that are in short supply, but qualified employees. Pre-selection is already a problem: Not everyone likes to talk to a chatbot or have personal information (picture, voice, what was said …) stored and evaluated by machines. The promise of an objective process will certainly find friends, but many applicants will also expect an impression of the environment and of their colleagues, and to be addressed personally. Last but not least, people adapt their behavior to their counterpart. If the counterpart is a machine, this ability of the applicant may go untested.

3. Comply with legal rules

A procedure must not only be fair; it must also be able to prove that it is. Self-learning systems still have problems in this respect. Since humans do not even know which patterns the machine has found, such proof is not straightforward. Given the known shortcomings of human observers, however, there are also opportunities here: AI could well check its own processes to see whether protected groups are systematically disadvantaged.
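As a rough illustration of what such a self-check could look like, the sketch below compares selection rates across groups and flags any group whose rate falls below four fifths of the highest group's rate. This "four-fifths" rule of thumb comes from U.S. employment selection guidelines and is not mentioned in the text above; the function names and the sample data are made up, and a real audit would need far more than this.

```python
from collections import Counter


def selection_rates(decisions):
    """Return {group: share of applicants selected}.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the applicant passed the assessment.
    """
    applicants = Counter()
    selected_counts = Counter()
    for group, selected in decisions:
        applicants[group] += 1
        if selected:
            selected_counts[group] += 1
    return {g: selected_counts[g] / applicants[g] for g in applicants}


def adverse_impact_ratios(decisions):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected at all, so no group is ahead of another.
        return {g: 1.0 for g in rates}
    return {g: rate / best for g, rate in rates.items()}


def flagged_groups(decisions, threshold=0.8):
    """Groups whose ratio falls below the threshold (four fifths by default)."""
    ratios = adverse_impact_ratios(decisions)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical data: 100 applicants per group, labels "A" and "B" are invented.
decisions = (
    [("A", True)] * 30 + [("A", False)] * 70
    + [("B", True)] * 18 + [("B", False)] * 82
)

print(selection_rates(decisions))
print(flagged_groups(decisions))
```

With this invented data, group B is selected at 18% against 30% for group A, a ratio of about 0.6, so it would be flagged for closer review. A flag of this kind is a prompt to investigate, not proof of discrimination.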

So what comes next?

Bill Gates wrote, “We always overestimate the change that will occur in the next two years and underestimate the change that will occur in the next ten.”

The big steps in natural language processing and in the recognition of faces and emotions have mostly been taken. AI has caught up with both the abilities and the flaws of human judgment; overcoming the latter will only be a minor step. It seems a safe prediction that in the next 10 years we will find much more AI-based decision support in talent management than self-driving cars on our roads.

Medium – May 2021